Language-Guided Robot Task Interpretation

Using language models as a constrained interpretation layer that maps intent and scene summaries into predefined robot actions.

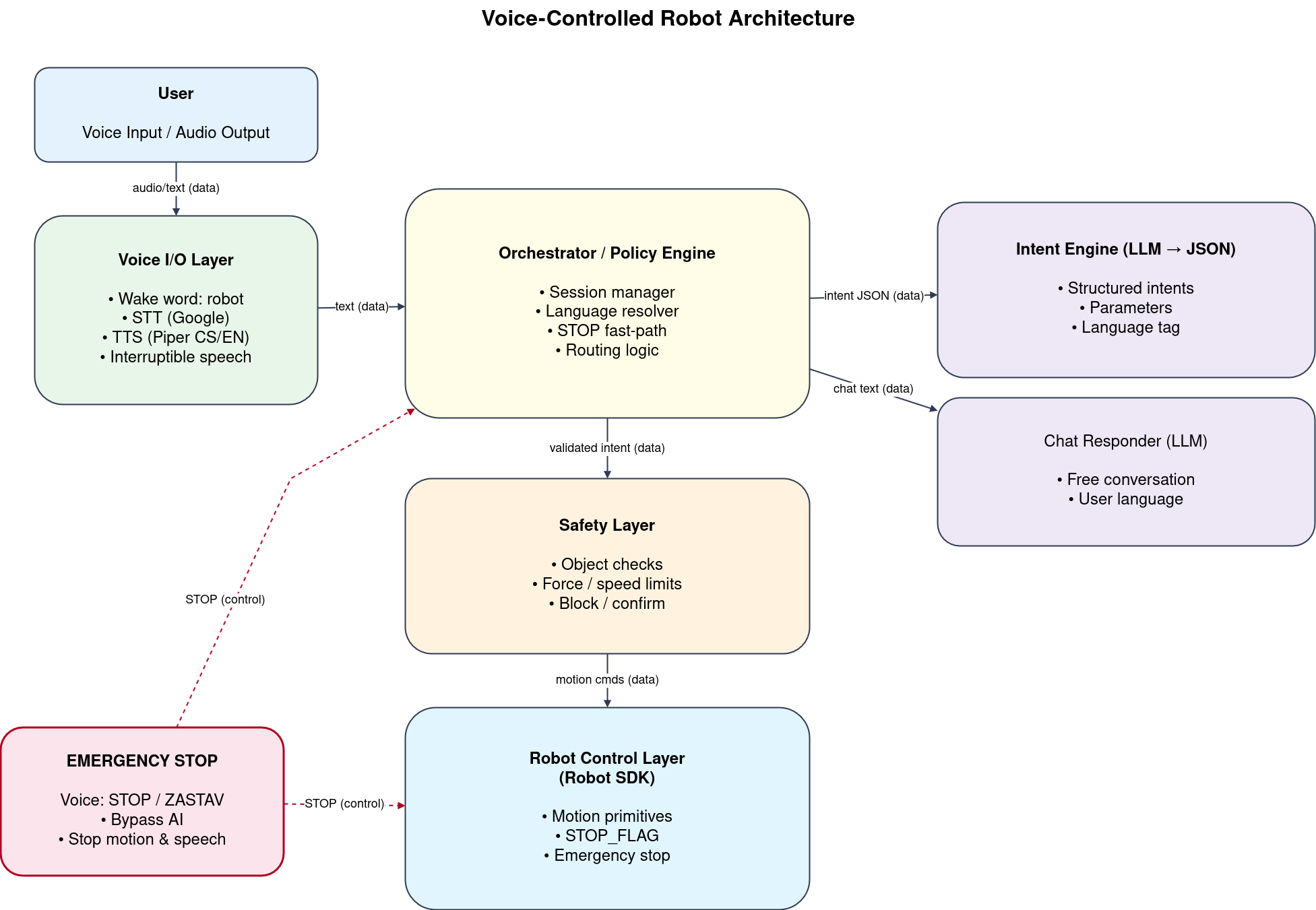

High-level architecture diagram for the voice-controlled robot.

Motivation

Interest in controlling robots through natural language stems from the need for systems that are easy to use across a wide range of tasks. A frequently raised question is whether industry truly benefits from natural-language robot control, given that manufacturing environments are typically noisy and processes are tightly defined by production plans and cycle times.

Nevertheless, natural language control can be valuable in service robotics and in manufacturing scenarios where a high degree of flexibility is required. In such cases, interaction with human operators becomes essential. Natural language provides an intuitive way for operators to instruct robots directly, without requiring knowledge of robot programming or job definition.

To be effective, language-based commands must be constrained by the robot’s capabilities. This can be achieved through predefined robot skills (e.g., pick an item from a bin). Skill-based robot programming enables the use of natural language while maintaining predictable and safe robot behavior.

- Natural-language commands for easy robot operation

- No-code programming approach with intuitive robot operation

- Fast deployment of robots into manufacturing processes

- Works efficiently in combination with predefined robot skills

System overview

The system was designed to meet user-defined requirements for natural language interaction. The user requested the ability to communicate with the robot using spoken language, including asking questions and issuing commands (e.g., "describe the scene in front of you", "pick an apple from the table").

The robot was expected to respond to questions related to products and services provided by the user. To support this functionality, a dedicated knowledge base was created for domain-specific topics. Questions outside this scope were handled through a general conversational fallback using the OpenAI API with internet access.
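The split between the domain knowledge base and the general fallback can be illustrated with a minimal sketch. The keyword matching, the `route_question` function, the `KNOWLEDGE_BASE` contents, and the `general_fallback` placeholder are all assumptions made for illustration; the actual retrieval method and the call to the external model are not described here.

```python
import re

def route_question(question, knowledge_base, fallback):
    """Answer from the domain knowledge base when a topic matches, else defer to the fallback."""
    words = set(re.findall(r"[a-z0-9]+", question.lower()))
    for topic, answer in knowledge_base.items():
        if topic in words:
            return "knowledge_base", answer
    return "fallback", fallback(question)

# Hypothetical domain content; the real knowledge base is not shown in this text.
KNOWLEDGE_BASE = {"warranty": "Warranty terms are described in the service contract."}

def general_fallback(question):
    # Placeholder standing in for the external conversational model call.
    return "[general answer to: " + question + "]"

source, reply = route_question("What is the warranty?", KNOWLEDGE_BASE, general_fallback)
```

Returning the source label alongside the reply makes it easy to log which path handled each question.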

In addition to dialogue, the robot was required to execute simple tasks based on its predefined skills, triggered through natural language commands. To ensure safe operation, multiple constraints and validation checks were applied before task execution. An independent safety stop mechanism was implemented as a parallel process, allowing the robot to be halted at any time.
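One way to picture the safety stop is as a shared flag that any parallel thread can set and that the skill executor checks before every step. The sketch below is only a software-level illustration under that assumption; `SafetyStop` and `run_skill` are hypothetical names, and a real robot would typically also enforce the stop in the controller or hardware.

```python
import threading

class SafetyStop:
    """Thread-safe stop flag that can be set from any parallel thread."""

    def __init__(self):
        self._event = threading.Event()

    def trigger(self):
        self._event.set()

    def is_triggered(self):
        return self._event.is_set()

def run_skill(steps, safety_stop):
    """Run step callables in order, checking the stop flag before each one.

    Returns the number of steps that were executed.
    """
    completed = 0
    for step in steps:
        if safety_stop.is_triggered():
            break
        step()
        completed += 1
    return completed

# Usage: a separate thread (e.g. a button or voice listener) triggers the stop.
stop = SafetyStop()
listener = threading.Thread(target=stop.trigger)
listener.start()
listener.join()
executed = run_skill([lambda: None, lambda: None], stop)  # no step runs after the stop
```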

- Natural-language interface mapped to a predefined set of robot actions and parameters

- Scene descriptions used to assess feasible actions at a task level

- Language models are limited to interpretation; motion execution is handled by deterministic control layers
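The points above can be sketched as a small skill registry plus a validation step that runs before anything is handed to the control layer. The skill names, parameter values, and the `validate_command` function are hypothetical; the actual skill set and checks are not listed in this text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Skill:
    """A predefined robot skill and the parameter values it accepts."""
    name: str
    allowed_params: dict  # parameter name -> set of permitted values

# Hypothetical skill catalogue used only for illustration.
SKILLS = {
    "pick": Skill("pick", {"item": {"apple", "bottle"}, "source": {"table", "bin"}}),
    "describe_scene": Skill("describe_scene", {}),
}

def validate_command(action, params, visible_items):
    """Return (ok, reason); reject anything outside the predefined skill set."""
    skill = SKILLS.get(action)
    if skill is None:
        return False, f"unknown action: {action}"
    if set(params) != set(skill.allowed_params):
        return False, "parameter names do not match the skill definition"
    for key, value in params.items():
        if value not in skill.allowed_params[key]:
            return False, f"value {value!r} not allowed for {key}"
    # Task-level feasibility check against the scene summary.
    item = params.get("item")
    if item is not None and item not in visible_items:
        return False, f"{item} is not in the current scene"
    return True, "ok"

result = validate_command("pick", {"item": "apple", "source": "table"}, {"apple"})
```

Because the language model can only select from this registry, an unexpected model reply results in a rejected command rather than an unplanned motion.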

Key challenges addressed

- Constraining model outputs for predictability and safety

- Bridging language intent and executable robot actions

- Handling ambiguity via structured outputs rather than open-ended responses
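As a rough illustration of the last point, a model reply can be required to be a small JSON object, with anything malformed or unexpected turned into a clarification request instead of an action. The `kind` field, its allowed values, and `parse_model_output` are assumptions for this sketch and do not reflect the omitted prompting or output format.

```python
import json

ALLOWED_KINDS = {"action", "answer", "clarify"}

def parse_model_output(raw):
    """Parse a model reply into a dict; malformed replies become a clarification request."""
    fallback = {"kind": "clarify", "question": "Could you rephrase the request?"}
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return fallback
    if not isinstance(data, dict) or data.get("kind") not in ALLOWED_KINDS:
        return fallback
    if data["kind"] == "action" and not isinstance(data.get("action"), str):
        return fallback
    return data

# A well-formed action passes through; free text is turned into a clarification.
parsed = parse_model_output('{"kind": "action", "action": "pick", "params": {"item": "apple"}}')
unclear = parse_model_output("Sure, I'll grab it!")
```

Treating free-form text as ambiguous keeps the robot from acting on a reply that the downstream validation could not interpret.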

What is intentionally not shown

Prompting strategy, model configuration, and evaluation details are intentionally omitted.